Gitreceiver/TAMA-MCP · Updated Apr 2000

Tama - AI-Powered Task Manager CLI ✨

Tama is a Command-Line Interface (CLI) tool for managing tasks, enhanced with AI capabilities for task generation and expansion. It uses AI (specifically configured for DeepSeek models via their OpenAI-compatible API) to parse Product Requirements Documents (PRDs) and break down complex tasks into manageable subtasks.

Features

- Standard Task Management: Add, list, show details, update status, and remove tasks and subtasks.

- AI-Powered PRD Parsing: (

tama prd <filepath>) Automatically generate a structured task list from a.txtor.prdfile. - AI-Powered Task Expansion: (

tama expand <task_id>) Break down a high-level task into detailed subtasks using AI. - Dependency Checking: (

tama deps) Detect circular dependencies within your tasks. - Reporting: (

tama report [markdown|mermaid]) Generate task reports in Markdown table format or as a Mermaid dependency graph. - Code Stub Generation: (

tama gen-file <task_id>) Create placeholder code files based on task details. - Next Task Suggestion: (

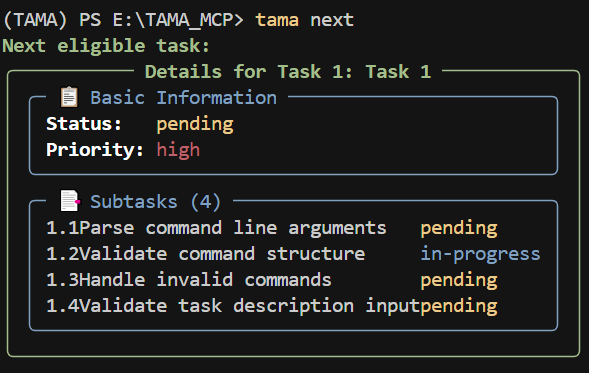

tama next) Identify the next actionable task based on status and dependencies. - Rich CLI Output: Uses

richfor formatted and visually appealing console output (e.g., tables, panels).
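
The dependency check described above amounts to cycle detection in a directed graph. The following is a minimal sketch of one common way to do it (depth-first search with three node states). It is not taken from Tama's source: the function name find_cycle and the dict-of-lists input shape are assumptions made for illustration.

```python
from typing import Dict, List, Optional


def find_cycle(deps: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one dependency cycle as a list of task IDs, or None if acyclic.

    `deps` maps a task ID to the IDs it depends on (hypothetical shape).
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {task: WHITE for task in deps}
    path: List[str] = []

    def visit(task: str) -> Optional[List[str]]:
        color[task] = GRAY
        path.append(task)
        for dep in deps.get(task, []):
            state = color.get(dep, WHITE)
            if state == GRAY:
                # dep is already on the current path, so the loop is closed
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[task] = BLACK
        return None

    for task in list(deps):
        if color[task] == WHITE:
            cycle = visit(task)
            if cycle:
                return cycle
    return None


tasks = {"1": ["2"], "2": ["3"], "3": ["1"], "4": []}
print(find_cycle(tasks))  # → ['1', '2', '3', '1']
```

The recursive form keeps the sketch short; a very deep dependency chain would call for an explicit stack instead.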

Installation & Setup

- Clone the Repository:

git clone https://github.com/Gitreceiver/TAMA-MCP

cd TAMA-MCP

- Create and Activate a Virtual Environment (Python 3.12 recommended):

uv venv -p 3.12

# Windows

.\.venv\Scripts\activate

# macOS/Linux

source .venv/bin/activate

- Install Dependencies & Project:

(Requires uv; install it with pip install uv if you don't have it)

uv pip install .

(Alternatively, using pip: pip install .)

Configuration ⚙️

Tama requires API keys for its AI features.

- Create a .env file in the project root directory.

- Add your DeepSeek API key:

# .env file

DEEPSEEK_API_KEY="your_deepseek_api_key_here"

(See .env.example for a template)

The application uses settings defined in src/config/settings.py, which loads variables from the .env file.
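
To make the lookup concrete, here is a minimal sketch of how a key such as DEEPSEEK_API_KEY could be read from a .env file using only the standard library. It does not reproduce src/config/settings.py, which may rely on a dedicated library; the helper names parse_env_text and load_api_key are hypothetical.

```python
import os
from pathlib import Path
from typing import Dict


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_api_key(env_path: str = ".env") -> str:
    """Prefer the process environment, then fall back to the .env file."""
    key = os.environ.get("DEEPSEEK_API_KEY")
    if not key:
        path = Path(env_path)
        if path.is_file():
            parsed = parse_env_text(path.read_text(encoding="utf-8"))
            key = parsed.get("DEEPSEEK_API_KEY")
    if not key:
        raise RuntimeError("DEEPSEEK_API_KEY is not set; add it to .env")
    return key
```

Failing early with a clear message when the key is missing is a design choice of this sketch; it keeps AI commands from failing later with a less obvious authentication error.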

Usage 🚀

Tama commands are run from your terminal within the activated virtual environment.

Core Commands:

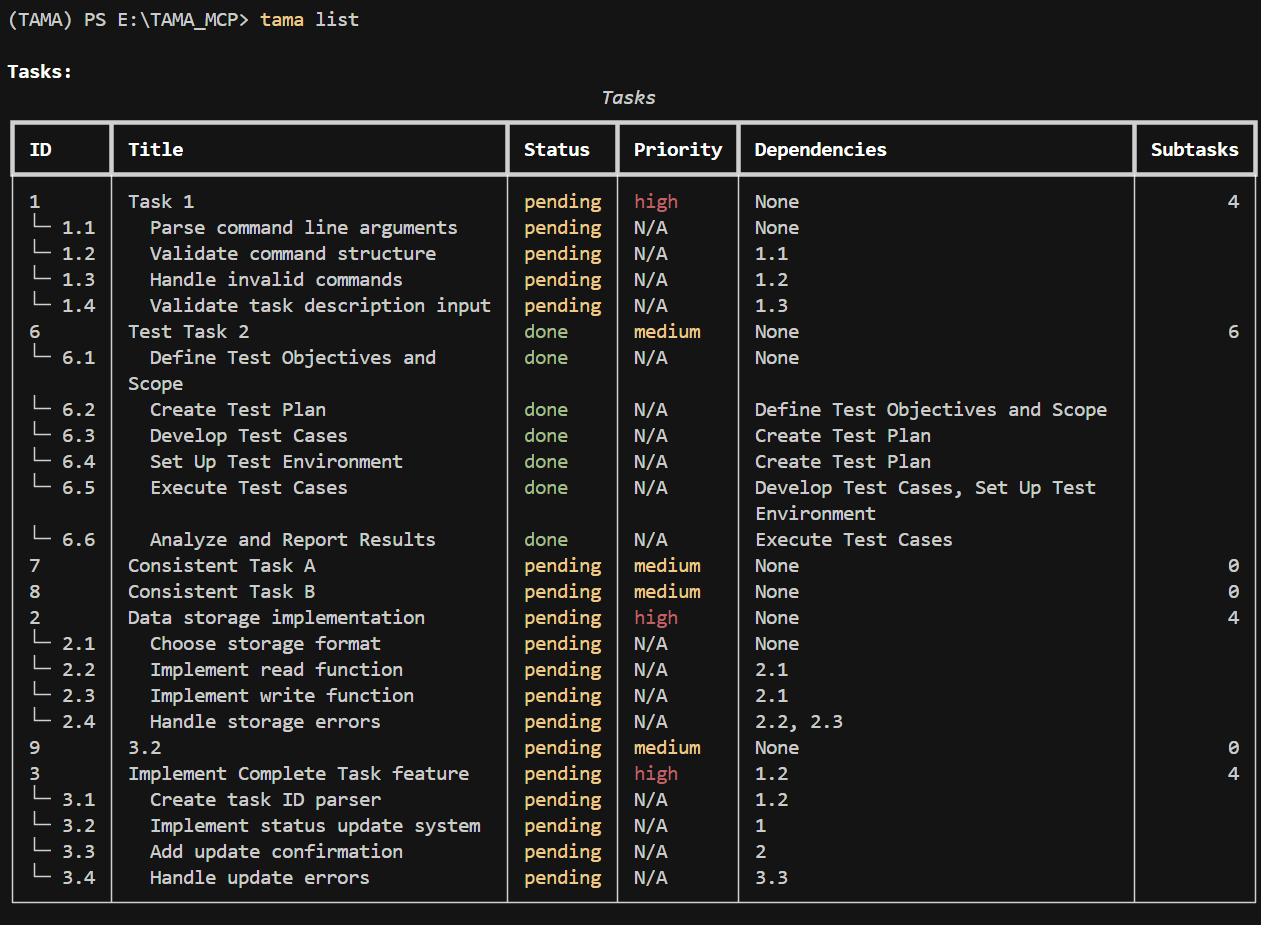

- List Tasks:

tama list

tama list --status pending --priority high # Filter

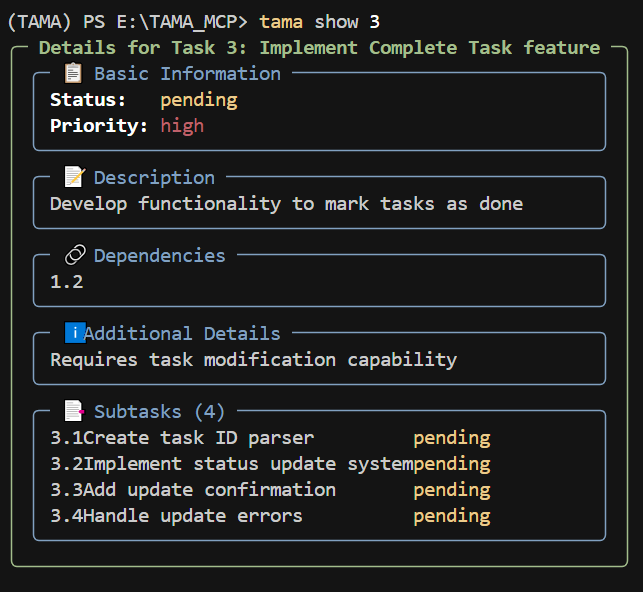

- Show Task Details:

tama show 1 # Show task 1

tama show 1.2 # Show subtask 2 of task 1

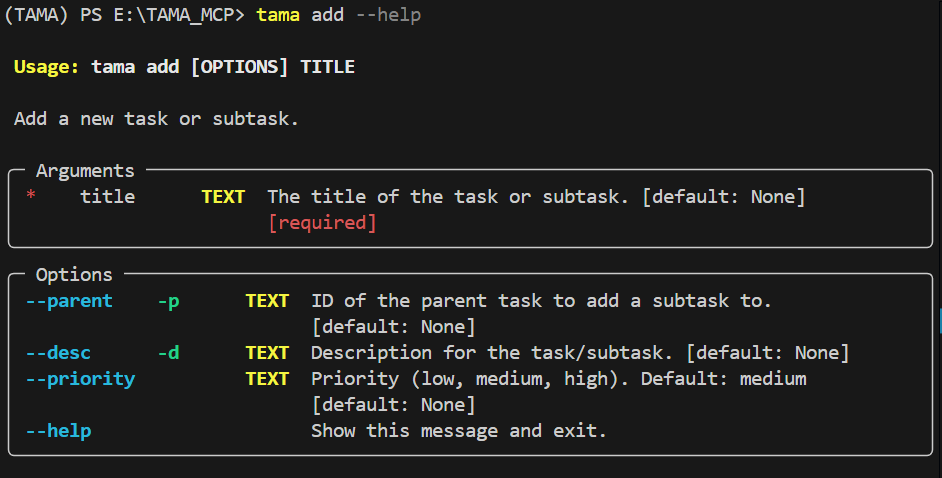

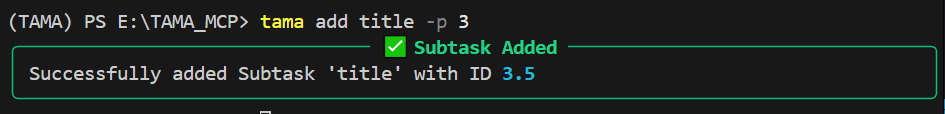

- Add Task/Subtask:

# Add a top-level task

tama add "Implement user authentication" --desc "Handle login and sessions" --priority high

# Add a subtask to task 1

tama add "Create login API endpoint" --parent 1 --desc "Needs JWT handling"

- Set Task Status:

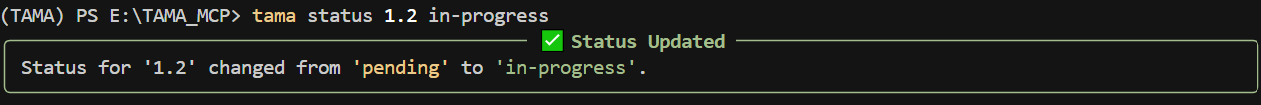

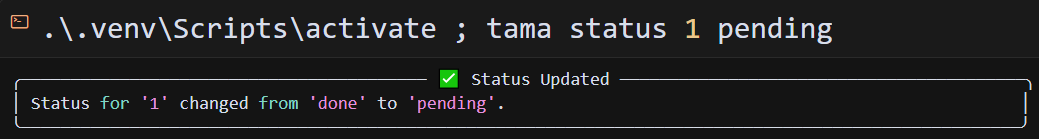

tama status 1 done

tama status 1.2 in-progress

(Valid statuses: pending, in-progress, done, deferred, blocked, review)

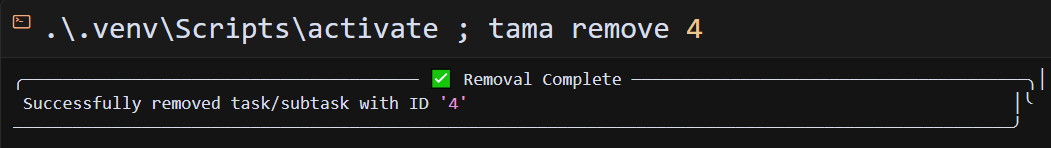

- Remove Task/Subtask:

tama remove 2

tama remove 1.3

- Find Next Task:

tama next
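
The selection behind tama next is described only as "based on status and dependencies". One plausible reading, sketched below, picks a pending task whose dependencies are all done and breaks ties by priority and then by ID. The task dictionary fields, the medium and low priority levels, and the tie-breaking rule are assumptions, not Tama's documented behavior.

```python
from typing import Dict, List, Optional

# Assumed priority levels; only "high" appears in the commands above.
PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def find_next_task(tasks: List[Dict]) -> Optional[Dict]:
    """Return the best pending task whose dependencies are all done."""
    done_ids = {t["id"] for t in tasks if t["status"] == "done"}
    candidates = [
        t for t in tasks
        if t["status"] == "pending"
        and all(dep in done_ids for dep in t.get("dependencies", []))
    ]
    if not candidates:
        return None
    # Lower rank means higher priority; the ID breaks ties deterministically
    return min(
        candidates,
        key=lambda t: (PRIORITY_ORDER.get(t.get("priority", "medium"), 1), t["id"]),
    )


sample = [
    {"id": 1, "status": "done", "priority": "high", "dependencies": []},
    {"id": 2, "status": "pending", "priority": "low", "dependencies": [1]},
    {"id": 3, "status": "pending", "priority": "high", "dependencies": [2]},
    {"id": 4, "status": "pending", "priority": "medium", "dependencies": [1]},
]
print(find_next_task(sample)["id"])  # → 4
```

Task 3 has the highest priority but is skipped because it depends on task 2, which is still pending.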

AI Commands:

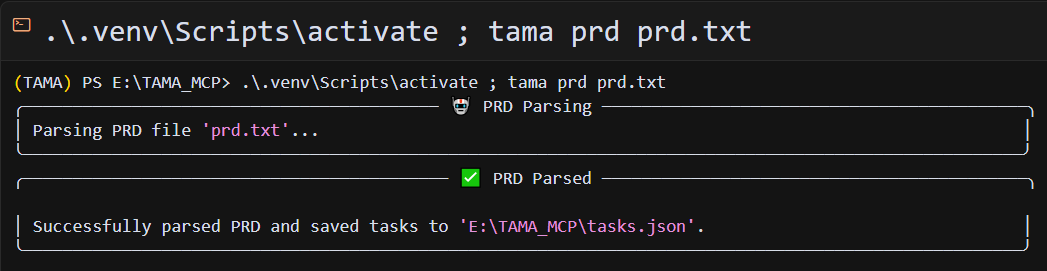

- Parse PRD: (Input file must be .txt or .prd)

tama prd path/to/your/document.txt

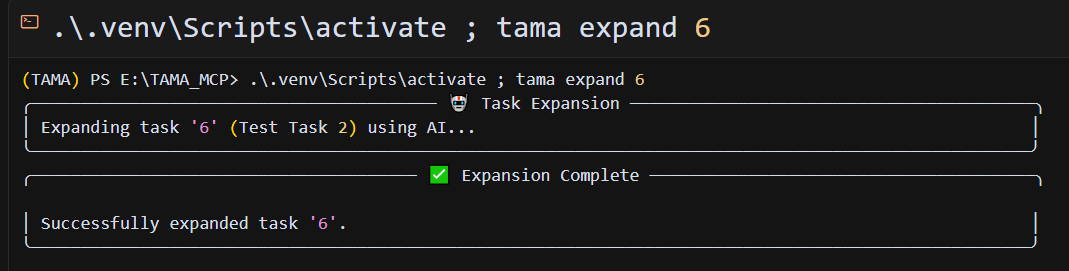

- Expand Task: (Provide a main task ID)

tama expand 1
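
As a rough illustration of what PRD parsing involves, the sketch below checks the file extension and builds a chat-completion payload in the OpenAI-compatible format. It sends nothing over the network. The prompt wording, the helper names, and the model name deepseek-chat are assumptions and may differ from what Tama actually uses.

```python
from pathlib import Path
from typing import Dict

ALLOWED_SUFFIXES = {".txt", ".prd"}  # per the input restriction stated above


def check_prd_path(path: str) -> Path:
    """Reject files that are not .txt or .prd before any API call is made."""
    p = Path(path)
    if p.suffix.lower() not in ALLOWED_SUFFIXES:
        raise ValueError(f"Unsupported PRD file type: {p.suffix or '(none)'}")
    return p


def build_prd_request(prd_text: str, model: str = "deepseek-chat") -> Dict:
    """Build an OpenAI-style chat payload (hypothetical prompt and model)."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Break the following PRD into a JSON list of tasks "
                           "with title, description, priority and dependencies.",
            },
            {"role": "user", "content": prd_text},
        ],
    }


payload = build_prd_request("Build a login page with JWT sessions.")
print(payload["messages"][1]["content"])
```

Validating the path first means an unsupported file never consumes API quota.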

Utility Commands:

- Check Dependencies:

tama deps

- Generate Report:

tama report markdown # Print markdown table to console

tama report mermaid # Print mermaid graph definition

tama report markdown --output report.md # Save to file

- Generate Placeholder File:

tama gen-file 1

tama gen-file 2 --output-dir src/generated
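
For the Mermaid report, a dependency graph definition can be produced from task data in a few lines. The sketch below shows the general idea under assumed field names (id, title, dependencies); the exact node labels and layout that tama report mermaid emits are not specified here and may differ.

```python
from typing import Dict, List


def to_mermaid(tasks: List[Dict]) -> str:
    """Render tasks as a Mermaid graph with edges from dependency to dependent."""
    lines = ["graph TD"]
    for task in tasks:
        tid = task["id"]
        # Double quotes would end the Mermaid label early, so swap them out
        label = task["title"].replace('"', "'")
        lines.append(f'    T{tid}["{tid}: {label}"]')
    for task in tasks:
        for dep in task.get("dependencies", []):
            lines.append(f"    T{dep} --> T{task['id']}")
    return "\n".join(lines)


demo = [
    {"id": 1, "title": "Set up repo", "dependencies": []},
    {"id": 2, "title": "Add login", "dependencies": [1]},
]
print(to_mermaid(demo))
```

Pasting the printed text into any Mermaid renderer draws an arrow from task 1 to task 2.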

Shell Completion:

- Instructions for setting up shell completion can be obtained via:

tama --install-completion

(Note: This might require administrator privileges depending on your shell and OS settings)

Development 🔧

If you modify the source code, remember to reinstall the package to make the changes effective in the CLI:

uv pip install .

MCP Server Usage

Tama can be used as an MCP (Model Context Protocol) server, allowing other applications to interact with it programmatically. To start the server, run:

uv --directory /path/to/your/TAMA_MCP run python -m src.mcp_server

In your MCP client (Cline, Cursor, Claude), add the following configuration:

{
  "mcpServers": {
    "TAMA-MCP-Server": {
      "command": "uv",
      "args": [
        "--directory",
        "/path/to/your/TAMA_MCP",
        "run",
        "python",
        "-m",
        "src.mcp_server"
      ],
      "disabled": false,
      "transportType": "stdio",
      "timeout": 60
    }
  }
}

With this configuration, the client starts the Tama MCP server, which provides the following tools:

- get_task: Finds and returns a task or subtask by its ID.

- find_next_task: Finds the next available task to work on.

- set_task_status: Sets the status for a task or subtask.

- add_task: Adds a new main task.

- add_subtask: Adds a new subtask.

- remove_subtask: Removes a subtask.

- get_tasks_table_report: Generates a Markdown table representing the task structure.
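
MCP clients invoke these tools with JSON-RPC 2.0 messages using the tools/call method. The sketch below only builds such a message to show its shape. The argument names task_id and new_status are hypothetical, since the tools' parameter schemas are not listed here; a real client would also complete the MCP initialize handshake before calling a tool.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 tools/call request for an MCP server."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Argument names below are assumptions made for illustration only.
print(build_tool_call(1, "set_task_status", {"task_id": "1.2", "new_status": "done"}))
```

In practice an MCP client library handles this framing, so the message is mainly useful for reading logs or debugging the stdio transport.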

License

This project is licensed under the MIT License. See the LICENSE file for details.
